p i = – c i + p i = p i – c iĪs seen, derivative of cross entropy error function is pretty. However, we’ve already calculated the derivative of softmax function in a previous post.

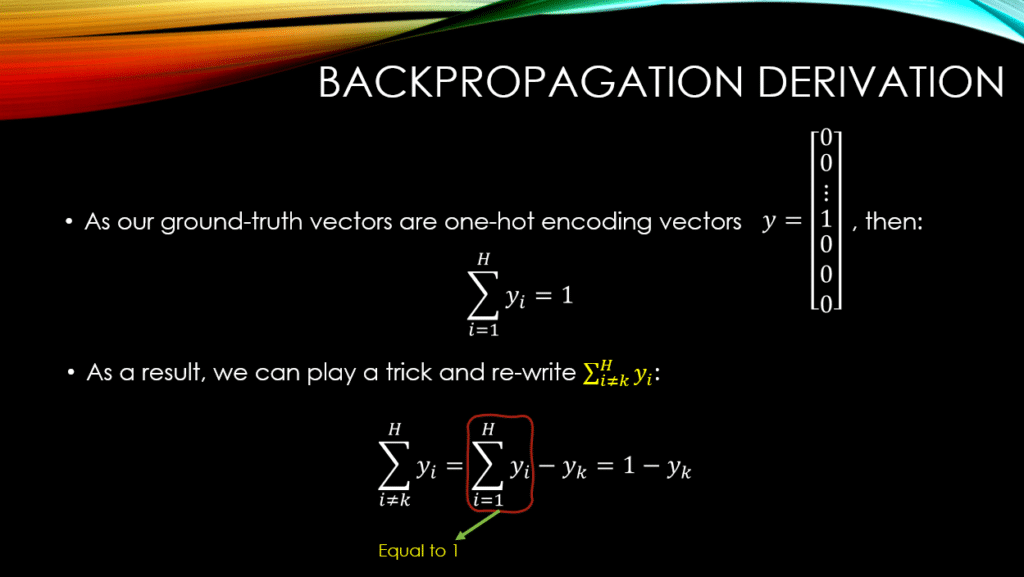

Now, it is time to calculate the ∂p i/score i. Notice that derivative of ln(x) is equal to 1/x. Only bold mentioned part of the equation has a derivative with respect to the p i. Now, we can derive the expanded term easily. ∂E/∂p i = ∂(- ∑)/∂p i Expanding the sum term Let’s calculate these derivatives seperately. We can apply chain rule to calculate the derivative. That’s why, we need to calculate the derivative of total error with respect to the each score. Cross entropy is applied to softmax applied probabilities and one hot encoded classes calculated second.

Notice that we would apply softmax to calculated neural networks scores and probabilities first. PS: some sources might define the function as E = – ∑ c i . log(1 – p i)Ĭ refers to one hot encoded classes (or labels) whereas p refers to softmax applied probabilities. First, well configure our model to use a per-pixel binary crossentropy loss. Things become more complex when error function is cross entropy.Į = – ∑ c i . For measuring the reconstruction loss, we can use the cross-entropy (when. If loss function were MSE, then its derivative would be easy (expected and predicted output). We need to know the derivative of loss function to back-propagate. Herein, cross entropy function correlate between probabilities and one hot encoded labels.Īpplying one hot encoding to probabilities Cross Entropy Error Function Finally, true labeled output would be predicted classification output. That’s why, softmax and one hot encoding would be applied respectively to neural networks output layer. Also, sum of outputs will always be equal to 1 when softmax is applied. After then, applying one hot encoding transforms outputs in binary form. entropyĪpplying softmax function normalizes outputs in scale of. We would apply some additional steps to transform continuous results to exact classification results. However, they do not have ability to produce exact outputs, they can only produce continuous results. NOTE: As per comment, the offset of 1e-9 is indeed redundant if your epsilon value is greater than 0.Neural networks produce multiple outputs in multi-class classification problems. Also, I added 1e-9 into the np.log() to avoid the possibility of having a log(0) in your computation. Here, I think it's a little clearer if you stick with np.sum().
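The closed form $p_i - c_i$ can be checked numerically. The following is a minimal NumPy sketch that assumes a single sample whose raw scores are stored in a 1-D array. The helpers softmax, one_hot, cross_entropy_loss and numerical_gradient, as well as the sample values, are hypothetical and introduced only for illustration. The sketch applies softmax and then one hot encoding as described in the text. It then compares the analytic gradient with a central finite-difference estimate.

```python
import numpy as np

def softmax(scores):
    """Normalize raw scores into probabilities in [0, 1] that sum to 1."""
    shifted = scores - np.max(scores)  # subtracting the max avoids overflow without changing the result
    exp_scores = np.exp(shifted)
    return exp_scores / np.sum(exp_scores)

def one_hot(probabilities):
    """Turn probabilities into a binary vector marking the most likely class."""
    encoded = np.zeros_like(probabilities)
    encoded[np.argmax(probabilities)] = 1.0
    return encoded

def cross_entropy_loss(scores, labels):
    """E = -sum_i c_i * log(p_i), with p = softmax(scores) and c one-hot labels."""
    p = softmax(scores)
    return -np.sum(labels * np.log(p))

def numerical_gradient(f, x, h=1e-6):
    """Central finite-difference estimate of df/dx, one coordinate at a time."""
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        grad[i] = (f(x + step) - f(x - step)) / (2 * h)
    return grad

scores = np.array([2.0, 1.0, 0.1])  # hypothetical raw network outputs
labels = np.array([0.0, 1.0, 0.0])  # hypothetical one-hot true class

p = softmax(scores)
analytic = p - labels  # closed form: dE/dscore_i = p_i - c_i
numeric = numerical_gradient(lambda s: cross_entropy_loss(s, labels), scores)

print(one_hot(p))                      # [1. 0. 0.]
print(np.allclose(analytic, numeric))  # True
```

In this sketch, the one-hot prediction (class 0) differs from the true label (class 1). The gradient is still well defined, because it depends only on the probabilities and the labels.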

Cross entropy in code

Cross-entropy is commonly used in machine learning as a loss function. It measures how different two probability distributions are. To understand the concept, assume the first probability distribution is denoted by A and the second by B. The cross-entropy $H(A, B) = -\sum_x A(x) \log_2 B(x)$ is the average number of bits required to encode events drawn from A when using a code optimized for B.

A related technique, the Cross-Entropy Method, is a simple algorithm that you can use for training RL agents. This method has outperformed several RL techniques on famous tasks, including the game of Tetris, so you can use it as a baseline before moving to more complex RL algorithms like PPO, A3C, etc.

I am learning about neural networks and I want to write a function cross_entropy in Python, defined as

$$CE = -\frac{1}{N}\sum_{i=1}^{N}\sum_{j=1}^{k} t_{i,j}\log(p_{i,j})$$

where N is the number of samples, k is the number of classes, log is the natural logarithm, $t_{i,j}$ is 1 if sample i is in class j and 0 otherwise, and $p_{i,j}$ is the predicted probability that sample i is in class j. To avoid numerical issues with the logarithm, the predictions are clipped to the range [epsilon, 1 - epsilon].

Following this description, I wrote the code below. It clips the predictions to that range and then computes the cross entropy with the formula above:

```python
import numpy as np

def cross_entropy(predictions, targets, epsilon=1e-12):
    """Computes cross entropy between targets (encoded as one-hot vectors) and predictions."""
    predictions = np.clip(predictions, epsilon, 1. - epsilon)
    ce = -np.mean(np.log(predictions) * targets)
    return ce
```

I then checked the function against a known correct answer. The check returned False, which means my definition of cross_entropy is not correct. Printing cross_entropy(predictions, targets) gave 0.178389544455, but the correct result should be ans = 0.71355817782. Could anybody help me find the problem with my code?

In reply: you're not that far off at all, but remember that you are taking the average of N sums, where N = 2 in this case. np.mean() divides by all N × k entries of the array rather than by N. So your code could read:

```python
import numpy as np

def cross_entropy(predictions, targets, epsilon=1e-12):
    predictions = np.clip(predictions, epsilon, 1. - epsilon)
    N = predictions.shape[0]  # number of samples
    ce = -np.sum(targets * np.log(predictions + 1e-9)) / N
    return ce
```
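Here is a minimal sketch that compares the two versions side by side, to make the factor-of-k difference concrete. The batch is hypothetical (N = 2 samples, k = 4 classes) and is not taken from the question. It was chosen because it reproduces both numbers quoted in the question. The function names cross_entropy_mean and cross_entropy_fixed are also illustrative. The fixed version omits the extra 1e-9 offset, because clipping already keeps every prediction at or above epsilon.

```python
import numpy as np

def cross_entropy_mean(predictions, targets, epsilon=1e-12):
    """Original attempt: np.mean divides by all N * k entries."""
    predictions = np.clip(predictions, epsilon, 1. - epsilon)
    return -np.mean(np.log(predictions) * targets)

def cross_entropy_fixed(predictions, targets, epsilon=1e-12):
    """Corrected version: sum over all entries, then divide by the N samples."""
    predictions = np.clip(predictions, epsilon, 1. - epsilon)  # clipping makes a log offset unnecessary
    n_samples = predictions.shape[0]
    return -np.sum(targets * np.log(predictions)) / n_samples

# Hypothetical batch: N = 2 samples, k = 4 classes, both samples in the last class.
predictions = np.array([[0.25, 0.25, 0.25, 0.25],
                        [0.01, 0.01, 0.01, 0.96]])
targets = np.array([[0, 0, 0, 1],
                    [0, 0, 0, 1]])

wrong = cross_entropy_mean(predictions, targets)
right = cross_entropy_fixed(predictions, targets)
print(np.isclose(wrong, 0.71355817782))                 # False
print(np.isclose(right, 0.71355817782))                 # True
print(np.isclose(right, wrong * predictions.shape[1]))  # True: off by a factor of k
```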

